Using neuromuscular signals to control prosthetic hands could make these powered limb replacements more intuitive for patients to use, according to researchers at North Carolina State University and the University of North Carolina at Chapel Hill. Specialized computer models are the key to the researchers’ approach, which could also be applied to other areas such as computer-aided design.

Prosthetic arms and hands currently on the market use pattern recognition and machine learning to train the device to recognize and react to changes in muscle activity. For example, once the user has repeated certain muscle contractions often enough, the device learns to open or close the prosthetic hand in response, allowing the user to grasp an object.
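For readers unfamiliar with pattern recognition control, the following minimal sketch shows the general idea under simplifying assumptions. Each window of multichannel EMG is reduced to one mean-absolute-value (MAV) feature per channel, and a nearest-centroid classifier, learned from windows the user has recorded and labelled during training, maps each new window to a hand command. The two-channel layout, the feature, the classifier, the function names and the signal values are all hypothetical. The article does not say which algorithms commercial devices use.

```python
import math

# Hypothetical two-channel setup: channel 0 over the forearm flexors,
# channel 1 over the extensors. Each window is a short run of raw EMG samples.

def mav(window):
    """Mean absolute value of one channel's EMG window (a simple time-domain feature)."""
    return sum(abs(x) for x in window) / len(window)

def features(channels):
    """One MAV value per channel, forming the feature vector for a window."""
    return [mav(ch) for ch in channels]

def train(examples):
    """Average the feature vectors of labelled training windows.

    examples: list of (channels, label) pairs recorded during training.
    Returns a dict mapping each label to its centroid feature vector.
    """
    sums, counts = {}, {}
    for channels, label in examples:
        f = features(channels)
        acc = sums.setdefault(label, [0.0] * len(f))
        for i, v in enumerate(f):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def classify(centroids, channels):
    """Return the label whose centroid is nearest (Euclidean distance) to this window."""
    f = features(channels)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))

# Made-up training windows that the user would have to repeat during training.
training = [
    ([[0.8, -0.9, 0.7], [0.1, -0.1, 0.1]], "close"),
    ([[0.7, -0.8, 0.9], [0.1, -0.2, 0.1]], "close"),
    ([[0.1, -0.1, 0.1], [0.9, -0.8, 0.7]], "open"),
    ([[0.2, -0.1, 0.1], [0.8, -0.9, 0.8]], "open"),
    ([[0.05, -0.05, 0.05], [0.05, -0.05, 0.05]], "rest"),
]
centroids = train(training)
print(classify(centroids, [[0.75, -0.85, 0.8], [0.1, -0.1, 0.15]]))  # prints "close"
```

Because each centroid is learned from signals recorded under particular conditions, a change in arm posture or skin condition that shifts the signal amplitudes can move a new window closer to the wrong centroid. This is the kind of retraining burden Huang describes.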

“Pattern recognition control requires patients to go through a lengthy process of training their prosthesis,” said He (Helen) Huang, a professor in the joint biomedical engineering program at North Carolina State University and the University of North Carolina at Chapel Hill. “This process can be both tedious and time-consuming. We wanted to focus on what we already know about the human body.”

In contrast, Huang and colleagues used computer models of how a limb behaves, including the movements of the hand, wrist and forearm, and translated that behaviour into the movements of a prosthesis. The researchers published the details of their technology in the journal IEEE Transactions on Neural Systems and Rehabilitation Engineering.

“This is not only more intuitive for users, it is also more reliable and practical,” said Huang. “That’s because every time you change your posture, your neuromuscular signals for generating the same hand/wrist motion change. So, relying solely on machine learning means teaching the device to do the same thing multiple times: once for each different posture, once for when you are sweaty versus when you are not, and so on. Our approach bypasses most of that.”

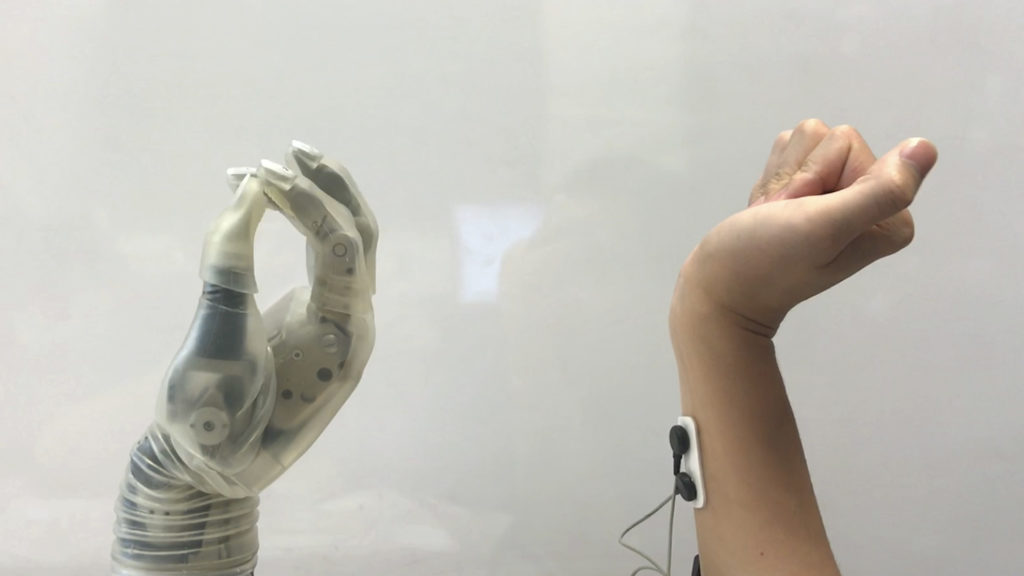

To eliminate this repeated training, the researchers used movement data to construct a generic model of the arm’s musculoskeletal system. Using electromyography (EMG) sensors placed on the forearms of six able-bodied volunteers, the team recorded the neuromuscular signals produced as the volunteers completed multiple tasks with their arm. These recordings were used to build the model, which was then used to control a prosthetic hand.

“When someone loses a hand, their brain is networked as if the hand is still there,” said Huang. “So, if someone wants to pick up a glass of water, the brain still sends those signals to the forearm. We use sensors to pick up those signals and then convey that data to a computer, where it is fed into a virtual musculoskeletal model. The model takes the place of the muscles, joints and bones, calculating the movements that would take place if the hand and wrist were still whole. It then conveys that data to the prosthetic wrist and hand, which perform the relevant movements in a coordinated way and in real time – more closely resembling fluid, natural motion.

“By incorporating our knowledge of the biological processes behind generating movement, we were able to produce a novel neural interface for prosthetics that is generic to multiple users, including an amputee in this study, and is reliable across different arm postures.”
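To make the pipeline Huang describes more concrete, the following is a deliberately simplified sketch of an EMG-driven musculoskeletal model for a single joint. EMG from a hypothetical forearm flexor and extensor is rectified, normalised and smoothed into muscle activations; the activations become muscle forces and a net wrist torque; and the torque drives a mass-spring-damper model of the wrist whose angle would be sent to the prosthetic wrist. This is not the researchers’ model, which the article does not describe in detail. The single degree of freedom, the linear force model, the function names and all parameter values are assumptions for illustration.

```python
from dataclasses import dataclass

# All parameter values are hypothetical placeholders, not fitted to any person.

def activation(emg_sample, prev_act, mvc, alpha=0.05):
    """Estimate muscle activation from one raw EMG sample.

    The sample is rectified, normalised by the maximum voluntary contraction
    (mvc) amplitude, clipped to [0, 1], then smoothed with a first-order
    low-pass filter (exponential moving average).
    """
    rectified = min(abs(emg_sample) / mvc, 1.0)
    return prev_act + alpha * (rectified - prev_act)

@dataclass
class WristModel:
    """Single-degree-of-freedom wrist driven by one flexor and one extensor."""
    max_force: float = 100.0   # N, peak force of each muscle
    moment_arm: float = 0.01   # m, lever arm of each muscle about the wrist
    inertia: float = 0.005     # kg*m^2, hand (or prosthesis) about the wrist
    damping: float = 0.1       # N*m*s/rad, passive joint damping
    stiffness: float = 1.0     # N*m/rad, passive joint stiffness
    angle: float = 0.0         # rad, flexion positive, extension negative
    velocity: float = 0.0      # rad/s

    def step(self, flexor_act, extensor_act, dt):
        """Advance the joint by dt seconds and return the new angle."""
        # Muscle force scales linearly with activation (no force-length or
        # force-velocity effects in this sketch).
        muscle_torque = self.moment_arm * self.max_force * (flexor_act - extensor_act)
        passive_torque = -self.damping * self.velocity - self.stiffness * self.angle
        accel = (muscle_torque + passive_torque) / self.inertia
        # Semi-implicit Euler integration of the joint's equation of motion.
        self.velocity += accel * dt
        self.angle += self.velocity * dt
        return self.angle

def drive(model, flexor_emg, extensor_emg, mvc, dt=0.001):
    """Feed paired EMG streams through the model and return the final wrist angle.

    A real-time controller would send each new angle to the prosthetic wrist
    as it is computed; this sketch only returns the last one.
    """
    a_flex = a_ext = 0.0
    for s_flex, s_ext in zip(flexor_emg, extensor_emg):
        a_flex = activation(s_flex, a_flex, mvc)
        a_ext = activation(s_ext, a_ext, mvc)
        model.step(a_flex, a_ext, dt)
    return model.angle

n = 5000  # 5 s of samples at 1 kHz
flexor = [0.25 if i % 2 == 0 else -0.25 for i in range(n)]  # steady flexor burst at half of mvc
extensor = [0.0] * n
print(round(drive(WristModel(), flexor, extensor, mvc=0.5), 2))  # prints 0.5 (rad of flexion)
```

The design mirrors the argument in the article: the wrist motion is computed from a model of muscles and joints rather than looked up from examples the user must record, so there is no per-posture classifier to retrain. Equal flexor and extensor activity (co-contraction) leaves the wrist at rest, as it would with opposing muscles.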

After only basic training with the prosthesis, both the able-bodied volunteers and the amputee who took part in the study were able to control the device. Encouraged by this success, the research team is now seeking feedback from other people who have lost part of their arm below the elbow (known as a transradial amputation) to make sure the technology helps these patients gain a better quality of life.

Despite these encouraging early results, the researchers do not expect their technology to be available to patients anytime soon. The team must first test it in clinical trials to show that it performs better than existing machine-learning-based control.

“To be clear, we are still years away from having this become commercially available for clinical use,” said Huang. “And it is difficult to predict potential cost, since our work is focused on the software, and the bulk of cost for amputees would be in the hardware that actually runs the program. However, the model is compatible with available prosthetic devices.”
